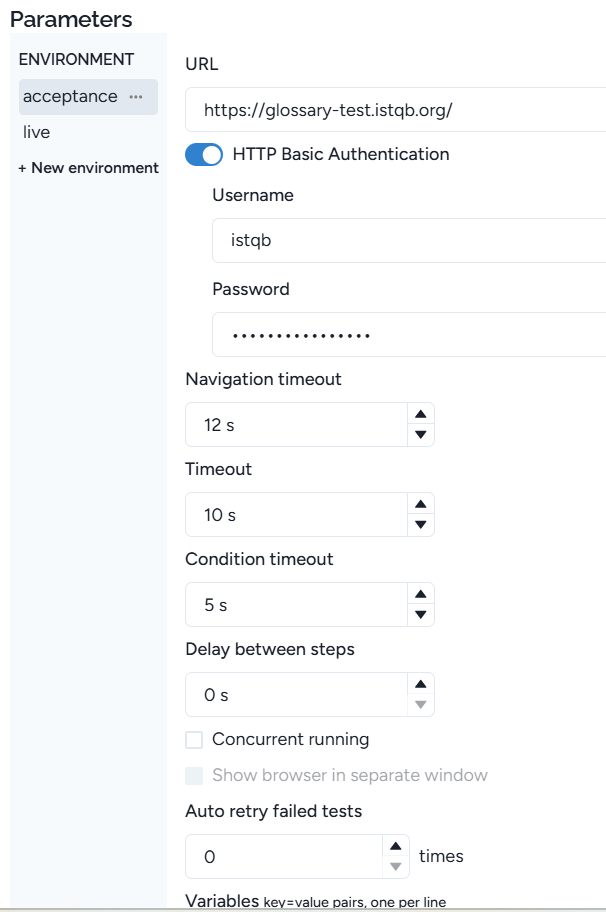

In the parameter window, you can set the URL of the software under test, the timeouts, variables, execution concurrency, and the environments:

Each project is tied to one URL. For a new project, don’t forget to set it.

You can set the username and password for HTTP basic authentication.
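For background, HTTP basic authentication sends the username and password as a Base64-encoded `Authorization` header. The sketch below only illustrates that standard encoding; the function name and the sample credentials are made up, and it does not show how Harmony sends the header internally.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of the HTTP 'Authorization' header for basic auth."""
    # The credentials are joined with a colon and Base64-encoded.
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


# Hypothetical credentials, for illustration only.
print(basic_auth_header("user", "pass"))  # Basic dXNlcjpwYXNz
```

Base64 is an encoding, not encryption, so use basic authentication only over HTTPS.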

Navigation timeout is the maximum time to wait for the new page to appear after a navigation (e.g. clicking a link). Timeout applies to all other cases, such as waiting for an expected value that turns out to be wrong. It is usually shorter than the navigation timeout.

By default, when you execute the test cases of a feature or of the whole project, they run sequentially, one by one. To run them in parallel, check ‘Concurrent running’.

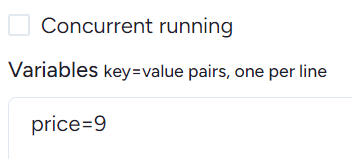

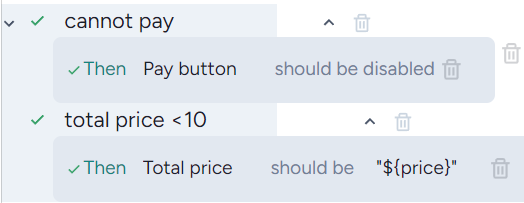

Different variables can be set for different environments. Just add a key-value pair:

and reference the same key in a low-level step (wrap it in ${}):
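The idea of per-environment variables can be sketched as a simple placeholder substitution. This is only an illustration of the concept, not Harmony's implementation: the environment names, keys, values, and the step text are all hypothetical, and Python's `string.Template` is used because it happens to share the `${key}` syntax.

```python
from string import Template

# Hypothetical key-value pairs, one set per environment.
ENVIRONMENTS = {
    "default": {"username": "test_user"},
    "production": {"username": "prod_user"},
}


def resolve_step(step_text: str, environment: str) -> str:
    """Replace every ${key} in a step with the value set for the environment."""
    return Template(step_text).substitute(ENVIRONMENTS[environment])


step = "type ${username} into the login field"
print(resolve_step(step, "default"))     # type test_user into the login field
print(resolve_step(step, "production"))  # type prod_user into the login field
```

The same step text works in every environment; only the key-value pairs differ.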

Environment

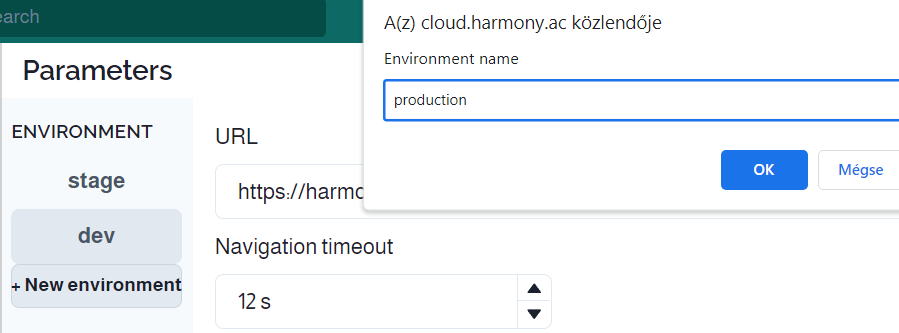

You can execute your tests on several environments. First, select ‘Parameters’, then press ‘New environment’ and enter the environment name (each name must be unique):

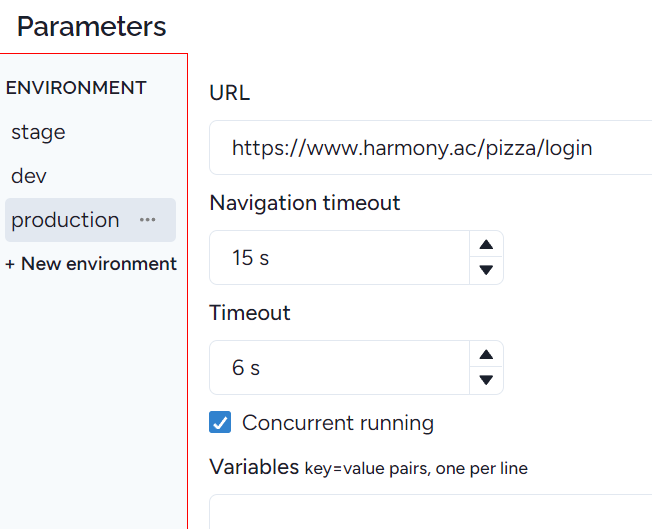

The result is a new environment (here, production), where you can set the URL, timeouts, etc. required for this environment:

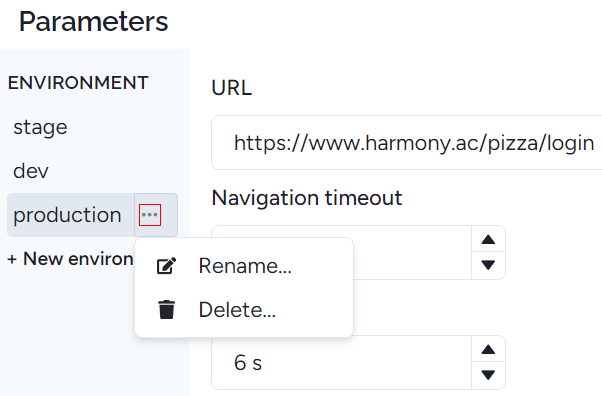

By pressing the three dots next to the environment name, you can modify or delete it:

Timeouts

Navigation timeout

A navigation timeout is a setting that specifies the maximum amount of time an automated test step will wait for a page navigation to complete before throwing an error. It prevents a step from getting stuck indefinitely if a page takes too long to load or a navigation event doesn't occur. Set it to 10-20 seconds.

Timeout

The timeout is the maximum time a step waits in all cases other than page navigation. It prevents tests from failing prematurely or waiting indefinitely by synchronizing test steps with application behavior, handling delays, and managing asynchronous processes. Usually, 5 seconds is enough, but when testing Harmony itself, for example, we need 15 seconds.

Condition timeout

The maximum time a conditional step waits for its condition. Usually, 2-3 seconds is enough.
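All three timeouts follow the same pattern: keep checking until something becomes true or a deadline passes. The sketch below is a generic polling helper under that assumption, not Harmony's actual mechanism; `wait_until`, the constant names, and the chosen values (picked from the ranges suggested above) are illustrative.

```python
import time
from typing import Callable

# Illustrative values in seconds, picked from the ranges suggested above.
NAVIGATION_TIMEOUT_S = 15.0
TIMEOUT_S = 5.0
CONDITION_TIMEOUT_S = 2.0


def wait_until(condition: Callable[[], bool], timeout_s: float,
               poll_s: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout_s` has elapsed."""
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False  # timed out: the caller reports the error
        time.sleep(poll_s)


# Simulate an element that appears 0.2 s after the step starts.
appears_at = time.monotonic() + 0.2
print(wait_until(lambda: time.monotonic() >= appears_at, CONDITION_TIMEOUT_S))  # True
print(wait_until(lambda: False, 0.1))  # False
```

A longer timeout never slows down a passing step, because the wait ends as soon as the condition holds. It only delays the report of a genuine failure.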

Concurrent running

With concurrent running, four test cases run in parallel, which reduces the execution time by 50-75%. Some tests cannot be executed in parallel, however, so set ‘Auto retry failed tests’ to at least one. In this case, after the parallel execution, the failed test cases are re-executed one by one. Sometimes you also need to increase the timeouts. If more test cases fail than with non-concurrent execution, don’t use concurrent running.
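The two-phase approach described above (run in parallel, then re-run the failures one by one) can be sketched as follows. This is a minimal illustration, assuming each test case is a function that returns `True` on success; the helper names and the sample tests are hypothetical and do not reflect how Harmony schedules tests internally.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

TestCase = Callable[[], bool]  # assumption: a test returns True when it passes


def run_parallel_then_retry(tests: Dict[str, TestCase],
                            workers: int = 4) -> Dict[str, bool]:
    """Run all tests with `workers` threads, then re-run failures sequentially."""
    # Phase 1: parallel execution.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(test) for name, test in tests.items()}
        results = {name: future.result() for name, future in futures.items()}
    # Phase 2: one sequential re-run of every test that failed in phase 1.
    for name, passed in list(results.items()):
        if not passed:
            results[name] = tests[name]()
    return results


def make_test(fail_first_n: int) -> TestCase:
    """Create a fake test that fails on its first `fail_first_n` calls."""
    calls = {"n": 0}

    def test() -> bool:
        calls["n"] += 1
        return calls["n"] > fail_first_n

    return test


tests = {"login": make_test(0), "search": make_test(1), "checkout": make_test(5)}
print(run_parallel_then_retry(tests))
# {'login': True, 'search': True, 'checkout': False}
```

Here `search` stands for a test that fails only when run alongside others and passes in the sequential re-run, while `checkout` fails in both phases and stays failed.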

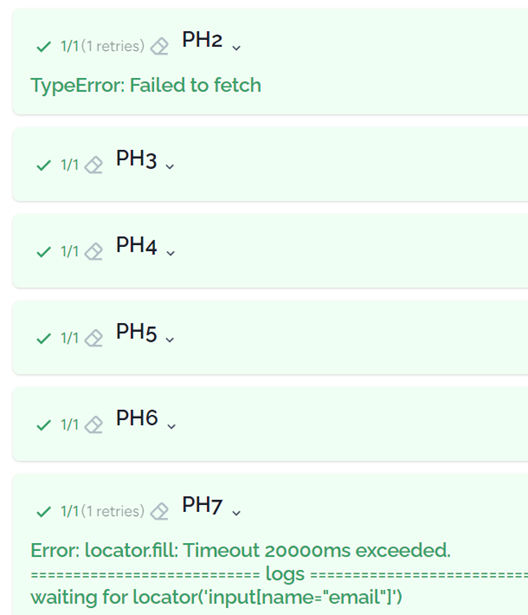

Auto retry failed tests - flaky test elimination

Test flakiness is considered one of the main challenges of automated testing. Fortunately, there is a significant difference between failed and flaky tests: a failed test always fails at the same execution point, whereas a flaky test may pass when it is re-executed. If a flaky test passes, then the (part of the) requirement covered by the test is correct. In that case, re-executing the test is enough for it to pass.
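The distinction between failed and flaky tests can be expressed as a small classification rule. The sketch below assumes a test is a function returning `True` on success and a configurable number of retries; the function name and the status labels are illustrative, not Harmony's report format.

```python
from typing import Callable


def run_with_retries(test: Callable[[], bool], retries: int) -> str:
    """Classify a test as 'passed', 'flaky' (passed on a retry) or 'failed'."""
    if test():
        return "passed"
    for _ in range(retries):
        if test():
            return "flaky"  # failed at first, passed when re-executed
    return "failed"  # failed on the first run and on every retry


# A fake test that fails once and then passes, to imitate flakiness.
outcomes = iter([False, True])
print(run_with_retries(lambda: next(outcomes), retries=1))  # flaky
print(run_with_retries(lambda: False, retries=2))           # failed
```

A test that fails deterministically stays failed however many retries are allowed, so retries remove noise without hiding real defects.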

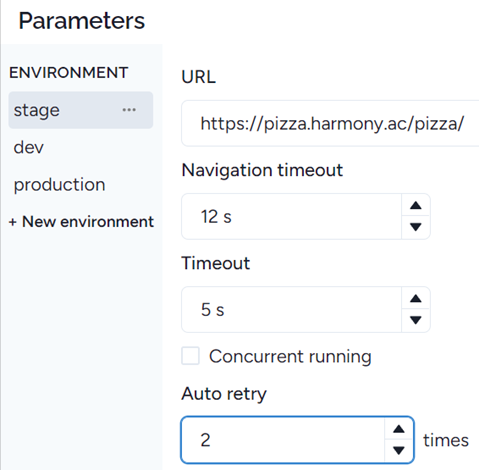

In Harmony, you can set the number of retries. If it’s 2, then failed test cases will be re-executed twice:

In our experience, one retry is usually enough. In the test report, you can check whether a test case passed only on a retry, because the error is displayed but the test is marked as passed (green):